Building on Mann's concept of sousveillance, defined by Mann and colleagues as “enhancing the ability of people to access and collect data about their surveillance and to neutralize surveillance” (Mann et al., 2003), Browne introduced the idea of “dark sousveillance” in her 2015 book *Dark Matters: On the Surveillance of Blackness*. She uses the term to describe the many ways in which enslaved Black Americans repurposed existing technologies to escape and to survive through slavery and abolition (Browne, 2015).

Although our social landscape has changed significantly since slavery, its repercussions can still be seen today in many laws and social attitudes. Data for Black Lives co-founder Yeshimabeit Milner reminds us that “The decision to make every Black life count as three-fifths of a person was embedded in the electoral college, an algorithm that continues to be the basis [of] our current democracy” (Milner, 2018).

In this paper I will explore some of the ways in which dark sousveillance is employed today and how it might affect the development of AI technologies going forward, in particular as Big Tech companies move towards physical products, such as smart assistants and cashierless shops, that will make it harder for consumers to avoid surveillance.

In the 18th century, the social theorist Jeremy Bentham designed the panopticon, a prison in which cell blocks surround a central watchtower, giving prisoners the impression of being under constant surveillance (Goodman, 2014). The idea may seem distant from the day-to-day lives of people who are not incarcerated, but modern policing techniques hark back to it. A London Underground trial reported in 2020 found that when officers patrolled the highest-crime platforms in 15-minute bursts, crime dropped by 28 per cent over the following 4 days, even at times when police were not present. The authors describe this as a “phantom effect”, in which “crime would decline when people expect police to be present, even if they are not—based on recent patterns of police patrol” (Ariel et al., 2020).

Turning to internet culture, one way in which the continued surveillance of Black communities in the USA by non-Black members of the public manifests is as viral content. One example is the “BBQ Becky” meme, which features a screenshot from a video of a white woman calling the police on a Black family having a barbecue in a park in Oakland, California. The image has been photoshopped onto various other “faux offenses”, such as “Barack Obama getting sworn into office, and even on the Black Panther as he greets cheering crowds at the Wakanda waterfalls” (Benjamin, 2019, p.34). While the meme is used for comedic effect, it points to how common such incidents are, and some Black people have turned to filming these encounters in an attempt to deter violence against themselves.

As social media platforms have grown in popularity over the last two decades and have been used to organise large-scale social movements such as the Black Lives Matter (BLM) protests (Mundt et al., 2018), the same platforms have increasingly imposed censorship to crack down on a variety of movements (Guardian, 2021). The Santa Clara Principles on Transparency and Accountability in Content Moderation, created in 2018, ask that companies be open about how much content they moderate, give users notice of moderation, and give users a chance to appeal (Santa Clara Principles, n.d.). However, as Jillian York points out, “the majority of companies have endorsed the Principles ... only one - Reddit - has managed to implement them in full” (York, 2021).

Recognising the organising power and influence of these platforms, politicians have also seized the chance to use them to their own advantage. One of the most infamous modern cases of social media's influence is that of the now-defunct political consulting firm Cambridge Analytica, which was shown to have used data on an estimated 87 million Facebook users in an attempt to influence the 2016 US elections (Schroepfer, 2018).

In her 2021 book *Discriminating Data: Correlation, Neighborhoods, and the New Politics of Recognition*, Chun explores how the 2013 study *Private Traits and Attributes Are Predictable from Digital Records of Human Behavior* (Kosinski et al., 2013), in which Kosinski, Stillwell and Graepel predicted users' “identity categories” from their Facebook likes, inspired Cambridge Analytica's microtargeting of advertisements on Facebook in the run-up to the 2016 election (Chun, 2021).

A 2020 Channel 4 News investigation revealed that, as part of Donald Trump's 2016 election campaign, Black voters were disproportionately placed in a “Deterrence” category of voters who the campaign hoped would stay home on election day: “In Georgia, despite Black people constituting 32% of the population, they made up 61% of the ‘Deterrence’ category. In North Carolina, Black people are 22% of the population but were 46% of ‘Deterrence’.” (Channel 4 News, 2020). As Chun points out, “there are many similarities between twentieth-century eugenics and twenty-first century data analytics. Both emphasize data collection and surveillance, especially of impoverished populations; both treat the world as a laboratory; and both promote segregation” (Chun, 2021).

With the rise of such targeted surveillance, users have moved to protect their data. The browser extension AdNauseam (AdNauseam, n.d.) explores the sousveillance tactic of generating enough noise in the data to make it worthless, by blocking and clicking every ad presented to the user as they browse the web. In their paper *Engineering Privacy and Protest: A Case Study of AdNauseam*, the extension's creators discuss its technical aspects, but also the criticism they have faced: “we note a palpable sense of indignation, one that appears to stem from the belief that human users have an obligation to remain legible to their systems, a duty to remain trackable. We see things differently … users should control the opacity of their actions, while powerful corporate entities should be held to the highest standards of transparency” (Howe & Nissenbaum, 2017).
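
To make the idea of "noise" concrete, the toy sketch below assumes a hypothetical tracker that infers a user's interests from per-category click-through rates. The ad categories, the browsing session and the function `click_through_rates` are all invented for illustration; this is not AdNauseam's code or any ad network's actual profiling method.

```python
from collections import Counter


def click_through_rates(shown, clicked):
    """Hypothetical tracker: per-category click-through rate used as an interest signal."""
    shown_counts = Counter(shown)
    clicked_counts = Counter(clicked)  # missing categories count as 0 clicks
    return {cat: clicked_counts[cat] / n for cat, n in shown_counts.items()}


# Hypothetical browsing session: the ad categories the user was shown.
shown = ["shoes", "loans", "travel", "shoes", "loans", "travel", "shoes", "travel"]

# Without obfuscation, the user clicks only what genuinely interests them.
organic_clicks = ["travel", "travel"]

# Obfuscation in the spirit of AdNauseam: every ad shown is also clicked.
obfuscated_clicks = list(shown)

print(click_through_rates(shown, organic_clicks))
print(click_through_rates(shown, obfuscated_clicks))
```

In the organic session only "travel" stands out. Once every ad is clicked, every category scores the same rate of 1.0, so the click data no longer distinguishes one interest from another.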

These systems are able to operate at scale because they use AI for decision-making. Chun references the study *Computer-Based Personality Judgments Are More Accurate than Those Made by Humans* (Youyou et al., 2015) and discusses the practice of using 90% of the data to build linear regression models and then testing them against the remaining 10% to verify their accuracy, stating that "Using this form of verification, standard for machine learning algorithms and models, means that if the captured and curated past is racist and sexist, these algorithms and models will only be verified as correct if they make sexist and racist predictions" (Chun, 2021).
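
As a minimal illustration of Chun's point, the sketch below uses hypothetical, synthetic records in which two groups, "A" and "B", share the same true outcome rate (20%), but group B is over-recorded in the historical labels. It fits two least-squares models on 90% of the records and scores both against the remaining 10%. One model uses a group indicator (its least-squares fit is simply each group's mean label); the other uses an intercept only. The data, group names and helper functions are assumptions for illustration and are not drawn from Youyou et al. or any deployed system.

```python
from statistics import mean


def make_records(n_people=1000):
    """Return hypothetical (group, recorded_label, is_test) tuples.

    Both groups share the same true outcome rate (20%), but group "B" is
    over-recorded: a further 20% of its members carry a positive label in
    the historical record although the outcome never occurred.
    """
    records = []
    for n in range(n_people):
        group = "A" if n % 2 == 0 else "B"
        k = n // 2
        truly_positive = k % 5 == 0                   # same 20% rate in both groups
        over_recorded = group == "B" and k % 5 == 1   # the bias lives in the record
        label = 1.0 if (truly_positive or over_recorded) else 0.0
        # Deterministic 90/10 split over whole blocks of five, so the training
        # and test sets carry the same (biased) label mix.
        is_test = (k // 5) % 10 == 9
        records.append((group, label, is_test))
    return records


def fit_group_means(train):
    """Least-squares fit on a group indicator: the per-group mean label."""
    return {g: mean(y for grp, y, _ in train if grp == g) for g in ("A", "B")}


def fit_group_blind(train):
    """Least-squares fit on an intercept only: one score for everyone."""
    overall = mean(y for _, y, _ in train)
    return {"A": overall, "B": overall}


def mse(model, records):
    """Mean squared error against the held-out, equally biased labels."""
    return mean((y - model[g]) ** 2 for g, y, _ in records)


records = make_records()
train = [r for r in records if not r[2]]
test = [r for r in records if r[2]]

group_aware = fit_group_means(train)
group_blind = fit_group_blind(train)

print("group-aware scores:", group_aware)
print("group-aware test MSE:", round(mse(group_aware, test), 3))
print("group-blind test MSE:", round(mse(group_blind, test), 3))
```

Because the held-out labels carry the same bias as the training labels, the model that reproduces the disparity (scoring group B at 0.4 against 0.2 for group A) achieves the lower test error, about 0.20 against 0.21 for the group-blind model, even though the two groups' true rates are identical.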

Research published in 2018 on COMPAS, a model used in US courts to predict recidivism, found that "Black defendants who did not recidivate were incorrectly predicted to re-offend at a rate of 44.9%, nearly twice as high as their white counterparts at 23.5%" (Dressel & Farid, 2018). Yet if the data used to verify the model is itself biased (Pollock & Menard, 2015), is the model not working exactly as expected?
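
The 44.9% figure is a false positive rate: its denominator is only those defendants who did not go on to re-offend. A minimal Python sketch of that calculation follows, using hypothetical toy cases and invented group labels "X" and "Y" rather than COMPAS data.

```python
from dataclasses import dataclass


@dataclass
class Case:
    group: str
    predicted_high_risk: bool
    reoffended: bool


def false_positive_rate(cases, group):
    """Share of people in `group` who did NOT re-offend but were still
    predicted to (false positives divided by all actual negatives)."""
    negatives = [c for c in cases if c.group == group and not c.reoffended]
    if not negatives:
        raise ValueError(f"no non-re-offending cases for group {group!r}")
    false_positives = sum(c.predicted_high_risk for c in negatives)
    return false_positives / len(negatives)


# Hypothetical toy cases, not COMPAS data.
cases = (
    [Case("X", True, False)] * 4 + [Case("X", False, False)] * 6
    + [Case("Y", True, False)] * 2 + [Case("Y", False, False)] * 8
    + [Case("X", True, True)] * 5 + [Case("Y", True, True)] * 5
)

print(false_positive_rate(cases, "X"))  # 0.4
print(false_positive_rate(cases, "Y"))  # 0.2
```

Here 4 of group X's 10 non-re-offenders are flagged as high risk, against 2 of group Y's 10. The defendants who did re-offend do not enter the calculation at all.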

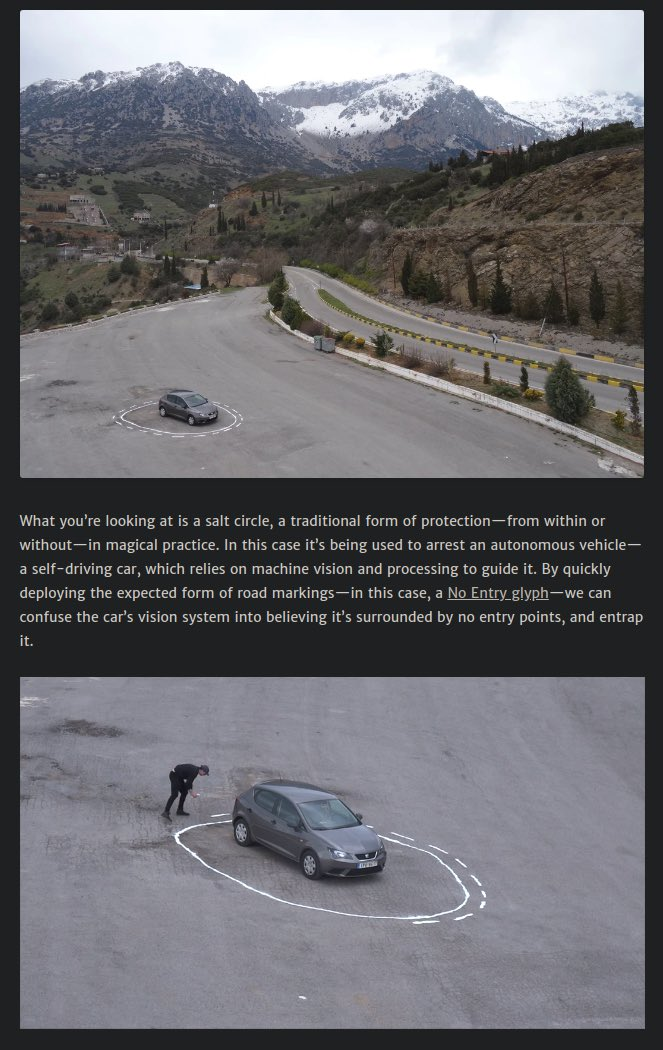

There has also been a rise in the use of facial recognition in policing (Fussey et al., 2021). Browne points to the tactics of dark sousveillance found in online tutorials on subverting face recognition technology with makeup and other techniques, which hark back to the dazzle camouflage painted on naval ships in World War I: designed not as a form of crypsis, but to mislead viewers about the subject's location and movements (Browne, 2015, p.163). The idea has also reached the general public through parody tweets, such as the one below, about ways to “confuse” a self-driving car.

(c a i t (yassified), 2021)

In her art exhibition *Race Cards*, Selina Thompson hand-writes 1,000 cards about the ideas of race and racism that permeate our current society. Written as a semi-chronological stream of consciousness, the cards pose questions such as:

"What does it mean to be black"

"When somebody tells me I'm playing the race card what are they really telling me"

"What is an appropriate response to our shared colonial history"

"What is it to hear somebody say 'I can't breathe' and decide not to loosen the headlock"

The exhibition is rewritten each time it is displayed, and it invites attendees to consider what the cards might contain if it were displayed in a hundred years' time, particularly since the later cards touch on the internet and recent protests, asking questions such as "Will the internet have long term impact on racial discourse, and will that be a good or bad thing?"

In recent years, the general public has become savvier about the vast amount of surveillance it is subjected to at all times, particularly as reporting on events such as the Cambridge Analytica scandal has reached the public eye. Popular media has also continued to discuss surveillance (and sousveillance), with themes from George Orwell's novel *1984* now presented in non-fiction form in works such as Netflix's 2020 documentary *The Social Dilemma*.

There are also more formalised movements emerging within tech and wider communities, including the Data for Black Lives movement (Data for Black Lives, n.d.) and the newly formed Distributed AI Research Institute (DAIR), described by its founder Timnit Gebru as "a space for independent, community-rooted AI research free from Big Tech’s pervasive influence" (Gebru, n.d.).

As the general public becomes ever-more concerned with privacy and starts to move towards adopting more privacy-focussed technology, Big Tech is sure to push towards creating environments that make personal data harder to obscure.

One of the most obvious examples of this is in the rise of smart assistants released by many of the big players in tech - Amazon's Alexa, Google's Google Home, Apple's Siri and Facebook's Portal. It's no coincidence that these companies all offer their smart assistant products at such low costs, when the actual product is the huge amount of personal data they can gain from having a 24/7 live feed into many people's homes, under the guise of providing convenience to users. As Daniel Hövermann points out in The Social Dilemma - "If you’re not paying for the product, then you are the product" (Orlowski, 2020).

These smart assistants also answer questions, which gives them the power to decide what information we receive. As Stucke and Ezrachi point out in *Alexa et al., What Are You Doing with My Data?*, "We don’t pay the digital butler’s salary. Google, Apple, Facebook, and Amazon, are likely covering the digital butler’s cost. They control the data and algorithm, access the information, and can engage in behavioral discrimination. In doing so, its gatekeeper power increases in controlling the information we receive" (Stucke & Ezrachi, 2018).

With this in mind, we can perhaps count ourselves fortunate that the toymaker Mattel shelved its plans for Aristotle, a smart assistant for children, after the Campaign for a Commercial-Free Childhood (a US non-profit organisation) urged Mattel not to release it. In a letter to Mattel, the organisation argued that the product “attempts to replace the care, judgment and companionship of loving family members with faux nurturing and conversation from a robot designed to sell products and build brand loyalty” (Hern, 2017).

In another example of companies encroaching on ever more aspects of people's lives, the Amazon Fresh stores appearing in metropolitan areas around the world promise to make shopping easy with "just walk out" technology (Amazon, n.d.). Cameras track the items customers pick up, allowing them to leave without checking out and to pay afterwards through their Amazon account. Crawford and Joler's observation rings true: "As human agents, we are visible in almost every interaction with technological platforms. We are always being tracked, quantified, analyzed and commodified" (Crawford & Joler, 2018). Inside an Amazon Fresh store, every look, every facial expression and every conversation could potentially be monitored and analysed. Is it any wonder that other retailers are following suit? In 2021 alone, Tesco opened its first GetGo store and Sainsbury's its first till-free shop, both in London, each promising customers the same "just walk out" experience.

With huge profits at stake for companies and the prospect of consumers losing all semblance of privacy, the fight is on between Big Tech, which seeks the ultimate level of surveillance, and consumers, who now more than ever need to employ every tactic of sousveillance to counteract these efforts.

Bibliography

AdNauseam. (n.d.). AdNauseam—Clicking Ads So You Don’t Have To. Retrieved 8 December 2021, from http://adnauseam.io

Amazon. (n.d.). Just Walk Out. Bringing Just Walk Out Shopping to Your Stores. Retrieved 8 December 2021, from https://justwalkout.com/

Ariel, B., Sherman, L. W., & Newton, M. (2020). Testing hot-spots police patrols against no-treatment controls: Temporal and spatial deterrence effects in the London Underground experiment. Criminology, 58(1), 101–128. https://doi.org/10.1111/1745-9125.12231

Benjamin, R. (2019). Race after technology: Abolitionist tools for the new Jim code. Polity.

Browne, S. (2015). Dark matters: On the surveillance of blackness. Duke University Press.

c a i t (yassified). (2021, September 17). This is far and away the funniest post about teslas https://t.co/4WQvM0Nx6Q [Tweet]. @kittynouveau. https://twitter.com/kittynouveau/status/1438837949349646336

Channel 4 News. (2020, September 28). Revealed: Trump campaign strategy to deter millions of Black Americans from voting in 2016. Channel 4 News. https://www.channel4.com/news/revealed-trump-campaign-strategy-to-deter-millions-of-black-americans-from-voting-in-2016

Chun, W. H. K. (2021). Discriminating data: Correlation, neighborhoods, and the new politics of recognition. The MIT Press.

Crawford, K., & Joler, V. (2018). Anatomy of an AI System. Anatomy of an AI System. http://www.anatomyof.ai

Data for Black Lives. (n.d.). Data 4 Black Lives. Retrieved 8 December 2021, from https://d4bl.org/

Dressel, J., & Farid, H. (2018). The accuracy, fairness, and limits of predicting recidivism. Science Advances, 4(1), eaao5580. https://doi.org/10.1126/sciadv.aao5580

Fussey, P., Davies, B., & Innes, M. (2021). ‘Assisted’ facial recognition and the reinvention of suspicion and discretion in digital policing. The British Journal of Criminology, 61(2), 325–344. https://doi.org/10.1093/bjc/azaa068

Gebru, T. (n.d.). The DAIR Institute. Retrieved 8 December 2021, from https://www.dair-institute.org/

Goodman, D. (2014). Panopticon. Encyclopedia of Critical Psychology, 1318–1320. https://doi.org/10.1007/978-1-4614-5583-7_464

Guardian. (2021, January 12). Twitter suspends 70,000 accounts sharing QAnon content. The Guardian. http://www.theguardian.com/technology/2021/jan/12/twitter-suspends-70000-accounts-sharing-qanon-content

Hern, A. (2017, October 6). ‘Kids should not be guinea pigs’: Mattel pulls AI babysitter. The Guardian. https://www.theguardian.com/technology/2017/oct/06/mattel-aristotle-ai-babysitter-children-campaign

Howe, D., & Nissenbaum, H. (2017, January 1). Engineering Privacy and Protest: A Case Study of AdNauseam.

Kosinski, M., Stillwell, D., & Graepel, T. (2013). Private traits and attributes are predictable from digital records of human behavior. Proceedings of the National Academy of Sciences, 110(15), 5802–5805. https://doi.org/10.1073/pnas.1218772110

Mann, S., Nolan, J., & Wellman, B. (2003). Sousveillance: Inventing and Using Wearable Computing Devices for Data Collection in Surveillance Environments. Surveillance & Society, 1(3), 331–355. https://doi.org/10.24908/ss.v1i3.3344

Milner, Y. (2018, April 7). An Open Letter to Facebook from the Data for Black Lives Movement. Medium. https://medium.com/@YESHICAN/an-open-letter-to-facebook-from-the-data-for-black-lives-movement-81e693c6b46c

Mundt, M., Ross, K., & Burnett, C. M. (2018). Scaling Social Movements Through Social Media: The Case of Black Lives Matter. Social Media + Society, 4(4), 2056305118807911. https://doi.org/10.1177/2056305118807911

Orlowski, J. (2020, September 9). The Social Dilemma—A Netflix Original documentary. In The Social Dilemma. Netflix. https://www.thesocialdilemma.com/

Pollock, W., & Menard, S. (2015). Perceptions of Unfairness in Police Questioning and Arrest Incidents: Race, Gender, Age, and Actual Guilt. Journal of Ethnicity in Criminal Justice, 13(3), 237–253. https://doi.org/10.1080/15377938.2015.1015197

Santa Clara Principles. (n.d.). Santa Clara Principles on Transparency and Accountability in Content Moderation. Retrieved 8 December 2021, from https://santaclaraprinciples.org/

Schroepfer, M. (2018, April 4). An Update on Our Plans to Restrict Data Access on Facebook. Meta. https://about.fb.com/news/2018/04/restricting-data-access/

Stucke, M. E., & Ezrachi, A. (2018). Alexa et al., What Are You Doing with My Data? Critical Analysis of Law, 5(1), Article 1. https://cal.library.utoronto.ca/index.php/cal/article/view/29509

York, J. (2021, May 1). Free for All. Offscreen Magazine, 24.

Youyou, W., Kosinski, M., & Stillwell, D. (2015). Computer-based personality judgments are more accurate than those made by humans. Proceedings of the National Academy of Sciences, 112(4), 1036–1040. https://doi.org/10.1073/pnas.1418680112