A little over 50 years ago, a computer programming student called Charley Kline sat down at a computer at UCLA and sent the first message over ARPANET, the precursor to the internet, to a computer 400 miles away at the Stanford Research Institute. The message was intended to be the word "login", but the system crashed after the first two letters had been sent. And thus, with "lo", the internet was born.

In its early days, the internet was so small that there was a time when you could get a directory of everyone on it printed in a handy manual. At that time it was simply another microcosm in a world full of them, and very few people could have predicted what an all-encompassing thing the internet would become. Early adopters envisioned the internet largely as a place for storing information, a high-tech library of sorts; few could have predicted the advent of social media, or just how prevalent tech would become in so many people's lives.

Now, several decades later, we live in a world where technology is ubiquitous, the "internet of things" is ever broadening, and most urbanised (and indeed rural) populations rely heavily on technology to facilitate their daily lives. The sheer amount of data circulating on the internet dwarfs anything produced at any previous time: a 2018 report by DOMO estimates that over 2.5 quintillion bytes of data are created every day [1].

In 2016 I read an article entitled "The code I’m still ashamed of" [2] and it struck a chord with me. At that time, I was just starting my journey into software engineering, and one quote stood out to me: "As developers, we are often one of the last lines of defence against potentially dangerous and unethical practices". For a while it felt a little abstract - I'd never worked on a product in a capacity that would allow me to have a real ethical impact - but the quote stayed with me, so much so that in every job I started I wrote it on the very first page of my notebook as a daily reminder. Years later, I've joined a health tech company and suddenly the work I do has a very strong impact, in many cases affecting someone’s ability to access life-saving healthcare.

The idea of ethics as part of modern culture is not particularly new. Popular media often explores morals and how to "do what's right", from the children's movie "Inside Out", which personifies the emotions inside a person's head, to the TV show "The Good Place", which goes quite deep into introducing specific philosophers and their ideologies.

However, in academia ethics is largely studied within the discipline of philosophy, so it can feel quite removed from the STEM fields as a whole, which tend to be far more concerned with hard facts than with open-ended interpretations of concepts. This attitude carries through to software engineering in general. Gone are the days when software went through lengthy life cycles with review by management and legal teams; instead, modern development practices involve extremely short life cycles ("agile working"), and many engineers now deploy code and features directly to users themselves. This is in stark contrast to most other engineering disciplines, which have years-long (or even decades-long) life cycles with multiple layers of oversight.

As technology comes to have a significant impact on society, it becomes ever more important to bring a code of ethics to the work that engineers do. The Facebook-Cambridge Analytica scandal [3] is a good example of massive societal change enacted by a single tech platform. As an engineer at a big company, it can be very easy to feel that the small piece of work you do has relatively little impact, but combined, the full machine can have a huge one.

Reflecting these concerns, in 2018 Stack Overflow added new questions on ethics and AI to its annual Developer Survey [4], surveying over 100,000 developers on their attitudes to the ethics of their work. While only a small percentage (less than 5%) said they would write code for an explicitly unethical purpose, the results also suggest a lack of personal responsibility among developers: only around 20% considered the developer who wrote the code to be ultimately responsible for unethical code.

This perhaps makes more sense when we consider the idea that a lot of software engineers don’t particularly see the direct impact of their work. Often there are many layers of researchers and managers sitting between developers and users, and in many tech company cultures it’s not considered the norm for engineers to talk directly to users and understand how they are engaging with the products.

The internet also means that entrepreneurs are no longer limited to targeting small groups of local users, but can instead build their user base from the entire world’s population: it’s currently estimated that more than 60% of the world’s population has internet access [5]. Many of these users live in very different cultures and societies from those of the people creating the technologies, further widening the gap between software engineers and their understanding of their products' impact. While operating at this scale holds the promise of doing a huge amount of global good, the flip side is the ability to cause great harm, especially when ethics are not a primary consideration of a wide-reaching product.

Kant’s categorical imperative holds that we should act only in ways we would be happy for everyone else to act as well. When we think of engineers selling user data to third parties to fund their product, it seems clear that they probably wouldn’t want another developer doing the same to them; but when they can't directly see the consequences for other people’s lives, it becomes easy to consider this an acceptable practice.

A natural suggestion for improving this would be to introduce a code of ethics for software engineering, much like the codes of conduct applied to other branches of engineering. But once we look past the surface of the problem, we can see that this is more complicated than it first seems, not least because ethics as a field is itself in constant flux, and there is never a single right answer to an ethical problem.

Looking at the vast variety of ethical frameworks, it’s also clear that no single framework will apply neatly to all technologies and situations. The idea of moral particularism is quite applicable here: given the breadth of scenarios that arise from using technology, we couldn’t possibly hardwire a rigid set of rules into any and all new technologies. Instead, we should consider a more open-ended approach, building a wider knowledge of ethics into the process of engineering and allowing engineers to use that knowledge to make decisions accordingly.

It should be noted that there have been attempts to introduce codes of conduct to tech over the years, including the ACM Code of Ethics [6] and the BCS Code of Conduct [7], but these remain fairly limited in scope, have seen little uptake in the making of digital products, and are rarely enforced.

Part of the issue is the sheer speed and scale at which technology is evolving: in less than a century we have gone from having no electronic computing devices to a world full of computers, in every aspect of our societies. These computers have gone from simple calculating machines to systems whose capabilities are comparable to those of humans on many tasks.

Our notions of AI and robotics in many ways predate our technical capabilities, whether that's Čapek's "R.U.R.", the Golem of folklore, or Asimov's humanoid robots. Modern media also encourages these ideas of sentience in technology, whether it's the Netflix show "Maniac", featuring an AI that falls in love with its creator, or the BBC's audio series "Forest 404", with robots so advanced that they don't even realise they aren't human. These all raise wider questions about what humanity even is, particularly as we see ourselves approaching a point in the not-too-distant future where there will be nothing a human can do that a robot cannot also do.

But people's ideas of "robots seizing control" are often very far from what that would actually look like, which seems more likely to be humans happily ceding control to machines. We already allow technology to make a huge number of decisions for us every day: what we see on our social media feeds, what we watch on Netflix, what route we take to work. Technology's takeover from humans is already very much in progress, and shows such as "Black Mirror" give an arguably much more realistic idea of how tech's influence on society could actually look.

Modern technology also offers an alternative to having functionality decided at creation ("Show the user the most recent result"), instead placing the decision of how to tackle a problem in the hands of more flexible algorithms, particularly with the rise of neural networks in recent years. This raises the question of how we could even govern these sorts of technologies when their behaviour is determined during use rather than at the start. Providing an underlying rulebook does not seem viable either, given the issues discussed above with deciding on an ethical framework in the first place.

In his fiction, Asimov presents the "Three Laws of Robotics":

· A robot may not injure a human being or, through inaction, allow a human being to come to harm.

· A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

· A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

At the time these seemed fairly reasonable, but looking at them around eighty years later, we can see how limited they are when it comes to thinking about the impact of tech on society at a larger scale. Artificial intelligence can encounter many problems that aren't simply about whether something would harm a single human; instead, many are likely to be akin to the "trolley problem", sometimes quite literally, as self-driving cars could face situations almost identical to various versions of it.

Stepping back from AI, we can look more broadly at the effect of technology and algorithms as a whole. Social media platforms now have a huge impact on real life, and algorithms that aim to drive continued user engagement often succeed at the cost of continually reinforcing users' existing biases. This amplifies the reach of people with dangerous political views, who can easily find each other, reinforce their views and coordinate their efforts to spread their ideologies. We see the real-life fallout as the lines between people's digital and physical lives blur: online echo chambers have played a part in many disruptive events in recent years, be it the storming of the US Capitol or mass shootings by people radicalised online.
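The feedback loop described above can be illustrated with a deliberately simplified toy model. This is a hypothetical sketch, not how any real platform's recommender works: it assumes just two kinds of content (posts that agree with a user's views and posts that challenge them), fixed click probabilities, and a recommender that reallocates the feed in proportion to the clicks each kind earned in the previous round. The function name `simulate_feed` and all the numbers are illustrative.

```python
def simulate_feed(p_agree: float, p_challenge: float, rounds: int,
                  start_share: float = 0.5) -> list[float]:
    """Return the share of the feed given to 'agreeing' content after each round.

    Hypothetical toy model: each round, the recommender splits the feed in
    proportion to the clicks each kind of content earned in the previous round.
    """
    share = start_share
    history = [share]
    for _ in range(rounds):
        # Expected clicks from the current feed mix.
        clicks_agree = share * p_agree
        clicks_challenge = (1 - share) * p_challenge
        # Engagement-driven update: whatever earned more clicks gets more space.
        share = clicks_agree / (clicks_agree + clicks_challenge)
        history.append(share)
    return history


if __name__ == "__main__":
    # A user only slightly more likely to click on posts they agree with.
    for round_number, share in enumerate(simulate_feed(0.6, 0.4, rounds=10)):
        print(f"round {round_number:2d}: {share:.0%} of the feed agrees with the user")
```

With click probabilities of 0.6 and 0.4, the agreeing share of the feed rises from 50% to over 95% within ten rounds, because each round multiplies the odds in its favour by 0.6 / 0.4 = 1.5. Nothing in the code sets out to polarise anyone; the drift comes purely from optimising for engagement.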

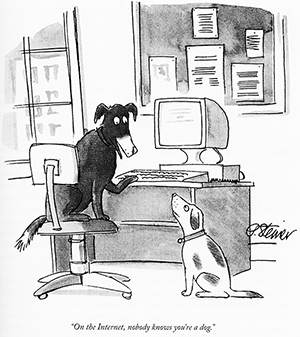

Legal proposals to contain these issues aren’t always easy to reason about either. Recent suggestions to require people to verify their ID before creating a social media account work fine if we’re only thinking about reducing anonymous "trolling", but fail to account for the many people, particularly from persecuted groups, who rely on anonymity online to get help; for them, this requirement would create a risk of being doxed.

[8]

Algorithms also don’t exist in a vacuum, but in a real world full of existing biases, many of which are long-standing and have compounded over time. There can be a perception that an algorithm will be "less biased" than a person when making decisions, but since most algorithms are trained on real-world datasets, an algorithm designed without careful thought is likely to simply reinforce existing biases.
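A minimal sketch of how this happens, assuming entirely hypothetical historical hiring records in which equally qualified candidates from group "B" were hired less often than those from group "A". The "model" here just counts past outcomes, a stand-in for a real trained classifier, and the names `HISTORY`, `train` and `recommend` are illustrative; but any model fitted to these labels faces the same problem, because the historical bias is exactly the pattern it is rewarded for learning.

```python
from collections import defaultdict

# Hypothetical historical records: (group, qualified, hired).
# Qualified candidates from group "B" were hired less often than group "A".
HISTORY = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 50 + [("B", True, False)] * 50
    + [("A", False, True)] * 10 + [("A", False, False)] * 90
    + [("B", False, True)] * 5 + [("B", False, False)] * 95
)


def train(records):
    """'Learn' P(hired | group, qualified) by counting past outcomes."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, qualified, was_hired in records:
        total[(group, qualified)] += 1
        hired[(group, qualified)] += int(was_hired)
    return {key: hired[key] / total[key] for key in total}


def recommend(model, group, qualified, threshold=0.6):
    """Recommend a candidate if the learned hire rate clears the threshold."""
    return model[(group, qualified)] >= threshold


if __name__ == "__main__":
    model = train(HISTORY)
    for group in ("A", "B"):
        print(group, model[(group, True)], recommend(model, group, qualified=True))
```

Here the model learns a hire rate of 0.8 for qualified "A" candidates and 0.5 for qualified "B" candidates, so with the (hypothetical) 0.6 threshold only the former are recommended. Simply deleting the group column is not a reliable fix either, since other features, such as postcode, can act as proxies for it.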

This is particularly dangerous for algorithms that decide bail amounts or which candidates progress through job interviews. Phrenology has long been debunked as a pseudoscience, but it threatens to rear its head again in the form of algorithmic physiognomy: systems that claim to assess criminality from facial features alone. Even a seemingly benign optimisation objective could have unintentionally bad outcomes. For example, if an algorithm at a private fund were simply tasked with maximising the value of its portfolio, it could short consumer-goods stocks, go long on defence stocks and then start a war. I think most people would agree that this is hardly an ideal outcome for society, even if the algorithm has succeeded in its task.

A good example of well-intentioned automation falling flat is social media platforms creating "throwback reels" as a fun way to look back on your year. These seem fun at first, but they fail to anticipate their possible negative repercussions: no one who has lost a loved one wants to be shown a throwback reel featuring them, and many platforms don't even provide a way to opt out.

Working in health tech has been a massive catalyst for me to think about these things more. Ethics is a longstanding part of medicine, and it stands in stark contrast to the tech industry, both in the presence (or lack thereof) of a code of ethics and in attitudes towards the need for one. Medicine's long history is an important part of why its codes of conduct are more refined: they have been revised over time, new medical staff have numerous examples of how to act in different situations, and formalised training programmes and supervised practice are the norm before a doctor works independently.

Historically in medicine, there have been cases that pushed medical ethics forward, such as the Tuskegee syphilis study [9], and we are only just starting to see the same happening in tech, where events like the Snowden leaks and the Theranos scam have opened up discussion around ethics, something that has largely stayed off the radar of many people in the industry until now. The topic is even beginning to be discussed in a wider forum: the popular documentary "The Social Dilemma" generated a lot of buzz last year as people outside the tech industry were exposed to the idea of surveillance capitalism and just how much of their data is used by large tech corporations.

It's also important to note one of the key differences in how codes of conduct apply in medicine and in tech: medical professionals need a licence to practise, whereas software engineers do not. This is widely considered a strength of the industry, as it makes the jobs accessible to a wider group of people, but by extension it also means there is no way to revoke someone’s ability to write software, even if they break a fundamental rule.

Recent years have seen a sharp rise in health-tech products: there are now many services that automate the triage of medical incidents, and apps used to predict and manage medical conditions, from services that manage repeat prescriptions to apps that track periods and fertility. On the one hand, these provide a valuable service to patients and doctors alike, particularly in health systems that are already stretched to capacity; on the other hand, automated triage and analysis can have dramatic consequences for patients. Under-diagnosing something life-threatening, such as failing to identify a tumour as cancerous, can cause preventable deaths, but over-diagnosing is not the solution either: it can mean people receiving unnecessary treatment, causing both trauma and undesirable side effects, and potentially taking treatment away from others.
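The tension between under- and over-diagnosis often comes down to where a decision threshold is set. This sketch uses made-up risk scores from a hypothetical triage model (`CASES` and `outcomes_at` are illustrative names, not a real system) to show that lowering the threshold catches more cancers but refers more healthy patients for unnecessary follow-up.

```python
# Hypothetical (risk_score, actually_malignant) pairs produced by a triage model.
CASES = [
    (0.95, True), (0.80, True), (0.65, True), (0.40, True),
    (0.70, False), (0.55, False), (0.35, False),
    (0.20, False), (0.10, False), (0.05, False),
]


def outcomes_at(threshold: float, cases=CASES) -> tuple[int, int]:
    """Return (missed_cancers, unnecessary_referrals) when patients with
    score >= threshold are referred for further investigation."""
    missed = sum(1 for score, malignant in cases if malignant and score < threshold)
    unnecessary = sum(1 for score, malignant in cases
                      if not malignant and score >= threshold)
    return missed, unnecessary


if __name__ == "__main__":
    for threshold in (0.9, 0.6, 0.3):
        missed, unnecessary = outcomes_at(threshold)
        print(f"threshold {threshold}: {missed} missed, "
              f"{unnecessary} unnecessary referrals")
```

On this toy data, moving the threshold from 0.9 to 0.6 to 0.3 changes the outcome from 3 missed cancers and 0 unnecessary referrals, to 1 and 1, to 0 and 3. No threshold eliminates both kinds of error, so choosing one is a decision about which harm to accept, not a purely technical one.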

Medicine uses the concept of a quality-adjusted life-year (QALY) to assess the value of healthcare interventions, and this could fairly easily be extended to algorithms used to triage patients and decide on their care. But it doesn’t resolve the problem of who is ultimately responsible for a wrong decision in a patient’s care: a doctor who gives incorrect treatment can be struck off, but how would this work if an algorithm caused the problem?
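For reference, the standard QALY calculation weights each period of life by a health-related quality score, typically on a scale where 1 represents full health and 0 represents death. Writing $t_i$ for the years spent in health state $i$ and $u_i$ for that state's quality weight:

$$
\text{QALYs} = \sum_{i} t_i \, u_i
$$

As a worked example with purely hypothetical figures, suppose treatment A is expected to give a patient 4 years at a quality weight of 0.75, and treatment B 2 years at 0.5:

$$
\Delta\text{QALY} = (4 \times 0.75) - (2 \times 0.5) = 3.0 - 1.0 = 2.0
$$

A triage algorithm built on this idea might rank options by expected QALY gain, but the arithmetic itself says nothing about who answers for it when the estimates feeding it are wrong.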

In the late ’90s, in response to rising concerns about the misuse of patient data, Dame Fiona Caldicott formulated a set of principles for handling confidential patient information, and these have been formally adopted within the health and care system as a guide, including for the use of technology to facilitate healthcare. The original six principles have since been extended to eight:

1. Justify the purpose(s) for using confidential information
2. Use confidential information only when it is necessary
3. Use the minimum necessary confidential information
4. Access to confidential information should be on a strict need-to-know basis
5. Everyone with access to confidential information should be aware of their responsibilities
6. Comply with the law
7. The duty to share information for individual care is as important as the duty to protect patient confidentiality
8. Inform patients and service users about how their confidential information is used

These principles are an example of a code of conduct applied to technology, and one of the most interesting is principle 7, added in 2013: it highlights that being too reluctant to share data can be just as detrimental to a patient as over-sharing, and that a balance has to be struck between respecting a patient’s privacy and not adding too many barriers to their care.
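As a rough illustration of how principles 1, 3 and 7 might translate into code, here is a hypothetical sketch of purpose-based data minimisation. The purposes, field names and the `share_for_purpose` function are invented for illustration and are not taken from any NHS system or standard: each justified purpose lists the minimum fields it needs, requests without a registered purpose are refused, and direct care still receives the clinical details it genuinely requires.

```python
# Hypothetical policy: each justified purpose maps to the minimum set of
# fields it needs (principles 1 and 3). Direct care gets the clinical
# details required to treat the patient safely (principle 7).
FIELDS_BY_PURPOSE: dict[str, frozenset[str]] = {
    "direct_care": frozenset({"patient_id", "name", "allergies", "current_medication"}),
    "appointment_reminder": frozenset({"name", "phone"}),
    "service_planning": frozenset({"age_band", "postcode_district"}),
}


def share_for_purpose(record: dict, purpose: str) -> dict:
    """Return only the fields of `record` that `purpose` is allowed to see."""
    if purpose not in FIELDS_BY_PURPOSE:
        # No justified purpose means no access at all (principle 1).
        raise PermissionError(f"no justified purpose registered for {purpose!r}")
    allowed = FIELDS_BY_PURPOSE[purpose]
    return {field: value for field, value in record.items() if field in allowed}


if __name__ == "__main__":
    record = {
        "patient_id": "0000000000",
        "name": "Example Patient",
        "phone": "01234 567890",
        "allergies": ["penicillin"],
        "current_medication": ["metformin"],
        "age_band": "40-49",
        "postcode_district": "AB1",
    }
    print(share_for_purpose(record, "appointment_reminder"))
    print(share_for_purpose(record, "direct_care"))
```

In this sketch the reminder service sees a name and phone number but no clinical data, while the clinician's view includes allergies and medication. The hard part, which no code settles, is deciding what each purpose genuinely needs.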

So how should we, as an industry, move forward? In my opinion, a shift has to be made in the way we consider ethics and its applicability to the tech industry:

- We need to think of tech, and in particular the ever more prevalent use of artificial intelligence, as a driver of our societal systems. To bring technologies to scale safely and sustainably, we need to be proactive about incorporating diverse voices into their creation, and to start the conversation about ethics, and how best to approach things responsibly, from the very beginning.

- We need to make ethical consideration part of the tech design process. Much as tech has embraced psychologists working on user experience and writers working on technical documentation, we also need to bring philosophers into the field to lead the way in considering the ethics of any new product.

- We need tech experts in positions to inform and shape policy decisions. When policy-makers provide no input, tech creates a de facto set of rules around what is allowed, and unethical practices can grow unfettered. The questioning of Zuckerberg by the US Congress in 2018 was a striking example of how little many lawmakers understand about technology: some showed little understanding of the role of ads in funding companies, with one senator asking, "How do you sustain a business model in which users don't pay for your service?"

- We need a code of conduct that is widely adopted across the globe, with genuine repercussions for building software that breaks its rules. Ethics also needs to become a formal part of computer science qualifications, as in most places around the world it is still not required for a certified degree. The same goes for coding bootcamps and online courses, which continue to grow as pathways into tech: studying ethics needs to be considered part of the requirement to practise software engineering. Aristotle spoke of a virtuous friend as a "second self"; if we are surrounded by software engineers thinking about the ethics of their products, we will naturally gravitate towards that mindset too, creating an industry where it becomes much harder to write unethical technology at all, knowingly or unknowingly.

[1] https://www.socialmediatoday.com/news/how-much-data-is-generated-every-minute-infographic-1/525692/

[2] https://www.freecodecamp.org/news/the-code-im-still-ashamed-of-e4c021dff55e/

[3] https://en.wikipedia.org/wiki/Facebook%E2%80%93Cambridge_Analytica_data_scandal

[4] https://insights.stackoverflow.com/survey/2018/

[5] https://internetworldstats.com/stats.htm

[6] https://www.acm.org/code-of-ethics

[7] https://www.bcs.org/membership/become-a-member/bcs-code-of-conduct/

[8] New Yorker, 5th July 1993

[9] https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study